Most of the people who passionately loathe technology corporations are strong advocates of open source software: that is, software whose human-readable source code is published and therefore available for inspection, or for further development, by anyone with the ability and inclination. The official Open Source Definition is a bit more specific than that, but broadly, open source software (OSS) has a transparent and communally-accessible production stream and, importantly, a licence that cannot prohibit commercial use.

CLOSED-SOURCE SOFTWARE DISTRIBUTION

Software is routinely programmed in a language that humans can understand. Provided they've taken the trouble to learn the language, that is. But typically, before distribution, the software will be translated into "machine code". This translation process, known as "compiling", renders the software independent of a language interpreter program, meaning it can run as a self-contained package. The benefits are...

- The end user doesn't have to install a separate interpreter in order to run the software. They can simply install the software itself, and then run it.

- Since the interpreter is eliminated, the software typically executes faster, with lower system resource overhead.

However, there's also a major disadvantage for the user, in that the distributed "machine code" can no longer be meaningfully read by a human being. The legible source is inaccessible, or 'closed'. Therefore, an archetypal evil corporation can insert features that abuse the end user without anyone outside an NDA-gagged dev team necessarily knowing about it. The most obvious example of a secret feature today would be a surveillance aid such as a logger and backdoor combo. But even in the days before the commercial Web, software manufacturers could inject malware which might, say, tinker with the user's system settings to make the computer hostile to competitors' software.
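For a rough feel of that loss of legibility, here's a minimal sketch in Python. It's an analogy rather than the real thing: Python compiles to bytecode for its own virtual machine, not to native machine code, and the `greet` function is invented purely for illustration. The principle carries over, though. What gets shipped is a sequence of bytes, not the text the programmer wrote.

```python
import marshal

# Human-readable source, as a programmer would write it.
SOURCE = '''
def greet(name):
    return "Hello, " + name
'''

# Translate the source into a code object (Python's compiled form).
code_object = compile(SOURCE, "<example>", "exec")

# Serialise the compiled form into raw bytes, similar in spirit to
# what a distributed, compiled file contains.
compiled_bytes = marshal.dumps(code_object)

# The compiled form still runs perfectly well without the source text...
namespace = {}
exec(marshal.loads(compiled_bytes), namespace)
greeting = namespace["greet"]("world")

if __name__ == "__main__":
    print(SOURCE)                # what the developer sees
    print(compiled_bytes[:60])   # what gets shipped: mostly opaque bytes
    print(greeting)
```

A few string fragments survive in the bytes, but the logic itself is no longer something you can sit down and read. Native machine code is considerably less forgiving still.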

Open source software has built its rep as a solution to these hidden dangers. Although the finished program is still often compiled into "machine code", the original, human-readable code is freely published, enabling the general public to see precisely what the software does and does not do. At least, that's the theory.

BACKGROUND

Open source software as we know it today has its roots in a 1980s movement started by Richard Stallman, and formalised in 1985 as the Free Software Foundation (FSF). Stallman was disgruntled with the erosion of old-style, 'sciencey' software development, in which everything was openly documented and shared for the greater good. As the 'eighties headed deeper into a commercial era of concealment and restriction, he sought to re-establish that openness and transparency.

The driving force behind his quest was a so-called "viral" licensing model, which was standardised as the GNU General Public License (GPL) in 1989.

A "viral" licence is designed to enforce its terms on an ever-growing sector of the market, eventually engulfing all intellectual property in the field, and idealistically killing off other licence types as they fall into minority use. The dynamics are both clever and powerful, and this was unquestionably an attempt by Stallman to destroy the entire concept of proprietary software.

In the case of Free Software, the "viral" mechanism starts with an assertion that the code must be published, freely distributable and forever available for any random party to further develop. Including commercial enterprise. And since the "free" label means "liberated", not "exempt from cost", commercial enterprise is allowed to monetise Free Software. This was the fuel of the strategy. People really, REALLY like being given free shit to exploit in any way they see fit. Always have, always will. Businesses LOVED the idea of selling a product they didn't have to pay anyone to produce...

But if they picked the low-hanging fruit, they found it to be rich in poison. With a "viral" licence, anyone subsequently exploiting the published code in new projects is bound by a "viral" clause, which states that they MUST also license their product under the same terms. That was the lock-in.

When you add the fuel and the lock-in together, you get a prolific spread of both the Free Software and the licence itself. It's incredibly tempting for commercial enterprise to use ready-made, free components, off the shelf. So a tech business grabs the Free Software, and then finds itself locked into "the commons". Because the licensing terms of Free Software prohibit any form of proprietary insert, Free Software can, despite its ambush-style offer of freebies to business, be considered deeply anti-commercial. In a nutshell, the "viral" licence forces any business further developing the software to hand all of its own code modifications to arch competitors for free re-use. Which, again, tempts more adoption of the licence.
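The spreading behaviour can be sketched as a toy model. This is a deliberate simplification under stated assumptions: every project name below is hypothetical, "builds on" is treated as a plain yes-or-no relationship, and real licences draw finer distinctions than this. The only point is to show how one "virally" licensed component binds everything downstream of it.

```python
# Toy model: each project lists the projects whose code it builds on.
# All project names are hypothetical.
BUILDS_ON = {
    "free_library": [],
    "vendor_app": ["free_library"],
    "startup_tool": ["vendor_app"],
    "independent_app": [],
}

# Projects originally released under the "viral" licence.
VIRAL_ORIGINS = {"free_library"}


def is_bound_by_viral_clause(project, builds_on, origins, seen=None):
    """True if `project` is, or derives from, a "virally" licensed project."""
    seen = set() if seen is None else seen
    if project in origins:
        return True
    if project in seen:
        return False  # already visited; guards against circular references
    seen.add(project)
    # A project inherits the obligation if anything it builds on carries it.
    return any(
        is_bound_by_viral_clause(parent, builds_on, origins, seen)
        for parent in builds_on.get(project, [])
    )


bound = sorted(
    name for name in BUILDS_ON
    if is_bound_by_viral_clause(name, BUILDS_ON, VIRAL_ORIGINS)
)

if __name__ == "__main__":
    print(bound)
```

In this sketch `startup_tool` never touched `free_library` directly, yet it ends up bound anyway, because it built on `vendor_app`. Only `independent_app`, which took nothing from the commons, stays outside.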

Enterprise came to hate this. In 2001, Microsoft's then-CEO, Steve Ballmer, publicly described GPL-licensed Linux as a "cancer".

THE SHIFT TOWARDS OPEN SOURCE

It wasn't just enterprises who began to object to Free Software's "viral" licensing. There were also allegations that "viral" licensing harmed developers, by creating a culture in which, beyond public goodwill (i.e. donation funding), there was no obvious way for them to earn a living.

True, the software could theoretically be sold. But because it could never include a proprietary insert, the definitive free version would always be identical to the definitive paid version. That rendered the paid version 100% uncompetitive per se, meaning that only the typical distribution advantages of commercial enterprise would keep it alive. With the arrival of high-capacity magazine cover disc giveaways, and then burgeoning Web access, the distribution advantages were shot. Thus, the scope for selling paid versions evaporated. By the late 1990s, Free Software was both useless to capitalists and a serious threat to their businesses. And corporations were reluctant to inject funding into Free Software, which further limited developers' earning potential.

It was at this point in time that the original Free Software concept was challenged by a new software movement: Open Source. Open Source was a pro-corporate movement which sought to divert freely-distributable software away from Stallman's anti-commercial ethos, and into a realm that expressly served businesses. To use a fairly cheap Microsoft meme, Open Source embraced Free Software, extended it, and then, at least in terms of its danger to corporate enterprise, extinguished it.

Open Source still had the basic principles of published code and communal-access development, but it diversified freely-distributable software licensing, so as to progressively move it away from "viral" conditionality. This would allow enterprises to exploit the communal software in combination with their own proprietary chunks.

This shift slowly transitioned the function of freely-distributable software development. It morphed from Stallman's original brief - a means for the end user to avoid corporate mistreatment - into a means for corporations to build exploitative tools on the cheap. With non-"virally"-licensed open source software, a company can take a fully-developed product like an operating system or a browser, graft in a piece of closed-source, proprietary spyware, and as long as they acknowledge and publish the open source portion of the code, they're hunky-dory. The only caveat is that they can't legitimately describe their software as Open Source.

TO TRUST OR NOT TO TRUST

So anything that actually is described and acknowledged as "open source" is integrity-verified, right? Unfortunately, it's not that simple.

Companies or their shills will sometimes send out a message that commercial offerings are "built on open source software". But this is not the same as the software actually being open source. For example, Google Chrome is built on the open source Chromium software, but Chrome incorporates closed-source, proprietary elements, and is therefore not, itself, open source.

But even when a piece of software does, in itself, qualify as open source, you can't always be sure of its integrity.

Whilst the full code is technically available to be audited by the public, there are some significant problems in practice...

Due to lack of coding knowledge and/or time constraints, the vast majority of the public can't audit the code themselves. Major software has many thousands, or even millions, of lines of code. Let's be realistic - people won't even read a 75-line privacy policy which they can fully comprehend. So the odds of them reading a 984,000-line codebase, of which they have absolutely no comprehension whatsoever, are definitively nil. Indeed, some heavily team-developed open source software is produced faster than even a professional software auditor could audit it.

And even when someone does take the trouble to audit major open source software, how does that person communicate their findings to the public? There is no such place as softwareaudits dot com. Or dot org for that matter.

The tech media is effectively captured or co-opted by brands, and will not speak against them. And the mainstream media won't care that some lump of geekware with 0.2% market share is transmitting data to Big Tech. So the biggest exposé you're likely to see is a tech geek screaming into the void on Twitter, or yelling from some Fediverse server that half the rest of the Fediverse has on a blocklist.

Simultaneously you have the tech media, and tech influencers, in the brand's pocket, singing the brand's praises. There's all sorts of flat-out bullshit from tech brands that never gets exposed to a significant audience. Not because the grapevine doesn't know about it. But because everyone with significant reach is incentivised to blind-eye it.

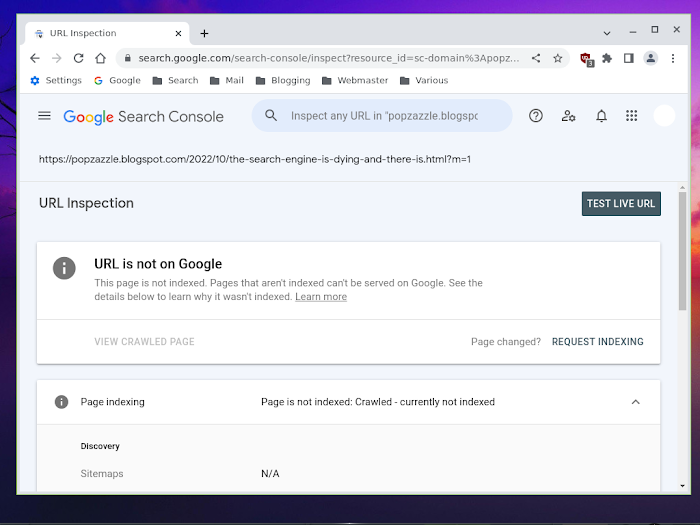

Additionally, search engines bury news that's bad for the tech industry. The whole sphere is fundamentally corrupt. For example, the most recent article on Popzazzle is called "Ten Important Things You Should Tell Your Friends About 2FA". I was deliberately ambiguous with the title so as to make it look positive. Was Google interested?...

Ohhhh yeaaaaahhhh. Sounds like a nice little promo for the eminently bankable 2FA racket, that! Hence, indexed almost immediately after publishing and submission. Within minutes.

But how about "The Search Engine is Dying and There is No Cure"? Very unambiguously negative. So does Google, the dying search engine, want a bit o' that? Or nah?

Nah. It's now eleven days since submission, and despite a groundswell of public empathy with the subject, our Silicon Valley buddy ain't going anywhere near that one.

So in short, there's no mechanism for negative open source software audits to reach the general public. If someone audits a Linux distro and finds problems, you won't see that audit. How many open source software audits have you seen this week?... Okay, this month?... Okay, this year?... Okay, this decade?... I rest my case.

There is no direct revenue in telling people NOT to use products. Indeed, people often hate being told NOT to use products. Because now they have to find and switch to another alternative, and that's work, and they hate work, so they hate the messenger for making them work. As a coder, why would you bother auditing a hundred thousand lines of C++, on behalf of a public who can't find you, and who, even if they do somehow find you, will hate you?

The next problem is that once open source software is compiled, it's no longer transparent. You can read and audit what the developers published - if you have coding comprehension and a spare six weeks. You can't read or audit what's been compiled for use. So you now have to ask: who compiled the software? And was there an opportunity for them to change the code before they compiled it? Who runs the software? Is it under your control or someone else's?

Certainly, if open source software is being used on someone else's remote server, you have no chance at all of verifying its integrity, and you have to consider it closed-source. I alluded to this in my eye-opening examination of the Tor browser/relay package. With Tor, the "open source" designation is meaningless to the end user, because what's published as source code, and what a Tor relay operator is actually using, could be two completely different things.

Fediverse instances have exactly the same issue. You can verify the published, open source code for, say, Mastodon - if you have an infinite amount of free time. But when you sign up to someone else's Mastodon instance, you have no idea what modifications they might have made. So Tor, Mastodon and the like are closed source for anyone who isn't running the servers on which the software is located. Don't let anyone hit you with the "open source!" chant and convince you that these resources, as typically consumed, have verified integrity.

In summary, if you're a tech bod who can vet and compile your own software, and run your own server when the software is designed to go online, open source genuinely is a guarantee that the software meets your criteria for acceptability. But open source development is meaningless for the rest of us, who use the software either remotely or pre-compiled.

Sure, Linux OS downloads have a checksum verification which protects the consumer against rogue insertions by file hosts. But with a typical distro you're only downloading pre-compiled code, and you have no guarantee that the distro providers themselves are honest. By analogy, if Facebook offered a checksum process to people downloading their app, it would mean the public could trust the distribution network. But would it mean they could trust Facebook, and that Facebook could not do evil things to them? Of course not.
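To make that limitation concrete, here's a minimal sketch of what a checksum check amounts to, assuming the provider publishes a SHA-256 digest (many distros do, but check what yours actually offers). The function names and the stand-in download bytes are invented for illustration. Notice that the only question the code can answer is "does this file match what the publisher says it should be?". It has nothing to say about what the publisher put in the file.

```python
import hashlib
import io


def sha256_of_stream(stream, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a binary file-like object."""
    digest = hashlib.sha256()
    # Read in chunks so a multi-gigabyte image needn't fit in memory.
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(stream, published_hex):
    """True if the stream's digest equals the checksum the provider published."""
    return sha256_of_stream(stream) == published_hex.strip().lower()


# Stand-in for a downloaded image, and for the digest shown on the
# provider's download page. Both are hypothetical.
download = b"pretend this is a distro image"
published = hashlib.sha256(download).hexdigest()

if __name__ == "__main__":
    # An untouched download passes...
    print(matches_published_checksum(io.BytesIO(download), published))
    # ...and a copy altered by a rogue file host fails.
    tampered = download + b" plus something nasty"
    print(matches_published_checksum(io.BytesIO(tampered), published))
```

With a real download you'd open the file in binary mode and pass it in, for example `with open("distro.iso", "rb") as f:` followed by `matches_published_checksum(f, published)`, where `distro.iso` is a placeholder filename.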

WHICH SOFTWARE CAN PEOPLE TRUST?

I believe the best barometer for software integrity is the level of marketing that accompanies it. Marketing is an investment, and the more investment that goes in, the greater the return the marketer will be seeking. In order to extract a return - especially from a free (as in beer) package - it's usually necessary to turn the user into a product.

So when assessing the likely trust factor of software, I tend to regard traits such as slick, pushy landing pages, social media marketing, brand blogs and/or media-schmoozing as a caution sign. The more of these trappings you see, the more investment the people behind the software are committing, and the higher monetisation will be on their agenda. Generally speaking, the easier something is to find and install, the worse it's likely to behave.

If the developers are just a small team of enthusiasts with a Git page and a donation prompt, no real marketing drive, and a product that's a pain in the neck to install - for me that's a signal of integrity. If they were onto some underhanded scheme they'd be persuading harder, and making the thing much easier to consume. Conversely, if they've got a social media manager, a salaried staff, their own forum, etc, then open source or not, one way or another their product is gonna bite your ass. It has to. They don't get their investment back if it doesn't.

Open sourcing is not a factor we should dismiss. But equally, it's not one on which the average consumer should place much importance. Its true role today is in keeping development costs down for commercial tech providers, and that was precisely what it was introduced to do. Historically, open source was not a revolution of transparency. Or even a renaissance of transparency. It was, very simply, the slayer of Stallman's anti-commercial Free Software. Capitalist by design. Capitalist in effect.